Why Replication Science?

The Challenge

Replication has long been a cornerstone for establishing trustworthy scientific results. At its core is the belief that scientific knowledge should not be based on chance occurrences. Rather, it is established through systematic and transparent methods, results that can be independently replicated, and findings that are generalizable to at least some target population of interest (Bollen, Cacioppo, Kaplan, Krosnick, & Olds, 2015).

Given the central role of replication in the accumulation of scientific knowledge, researchers have reevaluated the replicability of seemingly well-established findings. Results from these efforts have not been promising. The Open Science Collaboration (OSC, 2015) replicated 100 experimental and correlational studies published in high-impact psychology journals. Overall, the OSC found that only 36% of these efforts produced results with the same statistical significance pattern as the original study. The findings prompted the OSC authors to conclude that replication rates in psychology were low but not inconsistent with what has been found in other domains of science. These results contribute to a growing sense of a "replication crisis" occurring in multiple domains of science, including marketing (Madden, Easley, & Dunn, 1995), economics (Dewald, Thursby, & Anderson, 1986; Duvendack, Palmer-Jones, & Reed, 2017), education (Makel & Plucker, 2014), and prevention science (Valentine et al., 2011).

Despite consensus on the need to promote replication efforts, there remains considerable disagreement about what constitutes a replication, how a replication study should be implemented, how results from these studies should be interpreted, and whether direct replication of results is even possible (Hansen, 2011).

Existing Approaches to Replication

Over the years, researchers have sought to clarify what is meant by a replication. Most prior definitions have focused on repeating methods and procedures in conducting a replication study (Schmidt, 2009). For instance, Brandt et al. (2014, p. 218, emphasis in original) define direct replications as studies “that are based on methods and procedures as close as possible to the original study.” Zwaan et al. (2017, p. 5) describe replication as “studies intended to evaluate the ability of a particular method to produce the same results upon repetition.” Nosek and Errington (2017, p. 1) define replication as “independently repeating the methodology of a previous study and obtaining the same results.” Others have emphasized the need for replication procedures to be carried out by independent researchers (Simons, 2014) and on independent samples of participants (Lykken, 1968).

Procedure-based approaches to replication focus on ensuring that the same methods and tools are used in the original and replication studies. Thus, the quality of the replication is judged by how closely it repeats the methods and procedures of the original study (Brandt et al., 2014; Kahneman, 2014). Despite this seemingly straightforward approach, procedure-based replications pose multiple challenges for implementation in field settings. First, the original study may fail to report all relevant and necessary methods and procedures, rendering direct replications difficult if not impossible to achieve (Hansen, 2011). Second, this type of replication privileges the methods and procedures of the original study, but the original study itself may not have been well implemented. Replicating flawed methods and procedures from the original study may therefore be neither feasible nor desirable. Third, procedure-based approaches are inherently challenging because the repetition of methods itself is rarely the goal of any replication effort. More important is whether the intervention under consideration has a reliable and replicable causal effect on the outcome of interest. In procedure-based approaches, the causal effect of interest is usually not well defined by the researcher; it can only be inferred from an accurate description of the procedures and methods used. As a result, it may be challenging for the researcher to assess whether the replicated procedures and methods are similar enough to those of the original study and whether they are appropriate for replicating the same causal effect.

Developing the Methodological Foundations of a Replication Science

To develop the methodological foundations of a replication science, we draw insights from the fields of causal inference, statistics, and data science. The approach relies on the Causal Replication Framework, which defines the conditions (or assumptions) under which two or more studies produce the same causal effect within the limits of sampling error (Wong & Steiner, 2018). Key advantages of this approach are that it identifies clear causal quantities of interest across replication studies and makes explicit the assumptions needed for the design, implementation, and analysis of high-quality replication studies.
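To make "the same causal effect within the limits of sampling error" concrete, the following is a minimal sketch of one simple way to compare two effect estimates. It is not the analysis method of the Causal Replication Framework; it assumes the two studies use independent samples, that each reports an effect estimate with a standard error, and that a normal approximation is adequate. The function name `compare_effects` and all numbers are hypothetical.

```python
import math


def normal_cdf(z: float) -> float:
    """Standard normal cumulative distribution function."""
    return 0.5 * (1.0 + math.erf(z / math.sqrt(2.0)))


def compare_effects(est_a, se_a, est_b, se_b, alpha=0.05):
    """Two-sided z-test for the difference between two effect estimates.

    Assumes the estimates come from independent samples, so the variance
    of the difference is the sum of the two squared standard errors.
    """
    diff = est_a - est_b
    se_diff = math.sqrt(se_a ** 2 + se_b ** 2)
    z = diff / se_diff
    p_value = 2.0 * (1.0 - normal_cdf(abs(z)))
    return {
        "difference": diff,
        "se_difference": se_diff,
        "z": z,
        "p_value": p_value,
        # True when no difference is detected at level alpha.
        "consistent": p_value >= alpha,
    }


# Hypothetical estimates: an original study and a replication study.
result = compare_effects(est_a=0.30, se_a=0.10, est_b=0.12, se_b=0.11)
print(result)
```

Note that this comparison looks at the difference between the two estimates rather than at whether each study is individually statistically significant, so two studies with different significance patterns can still be consistent with one another. Failing to detect a difference is also not the same as demonstrating equivalence; with small samples, large differences can go undetected.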

Conceptualizing replication through the Causal Replication Framework yields two important implications for the planning of replication studies. First, although the assumptions for the direct replication of results are stringent, researchers can address and probe these assumptions through the thoughtful use of research designs and diagnostic probes. Second, research designs may be used to identify potential sources of effect variation by systematically violating one or more assumptions while meeting all others. In this approach, a prospective systematic replication design is applied so that every replication assumption is met except the one under investigation. If results do not replicate, the researcher can attribute the difference in effects to the assumption that was deliberately varied. Importantly, under the Causal Replication Framework, failure to replicate results is not inherently a problem for science, as long as the researcher is able to identify why. That is, replication failure may also be conceptualized as effect variation, which must be understood for generalizing to broader target populations of interest.

Creating the methodological foundations for a replication science.

Our work to develop a methodological foundation for replication science has four research aims. The core of the work begins with the Causal Replication Framework. From there, we derive individual research designs for conducting systematic replications and testing sources of effect variation. To identify multiple sources of variation, we incorporate a series of replication research designs for testing one or more replication assumptions. Finally, our team is developing new methods for analyzing replication designs and for evaluating sources of effect variation. To assess the feasibility of these approaches, we work in collaboration with substantive education researchers in special education, teacher preparation, reading, and early childhood education to conduct replication studies.

A Multi-Disciplinary Effort

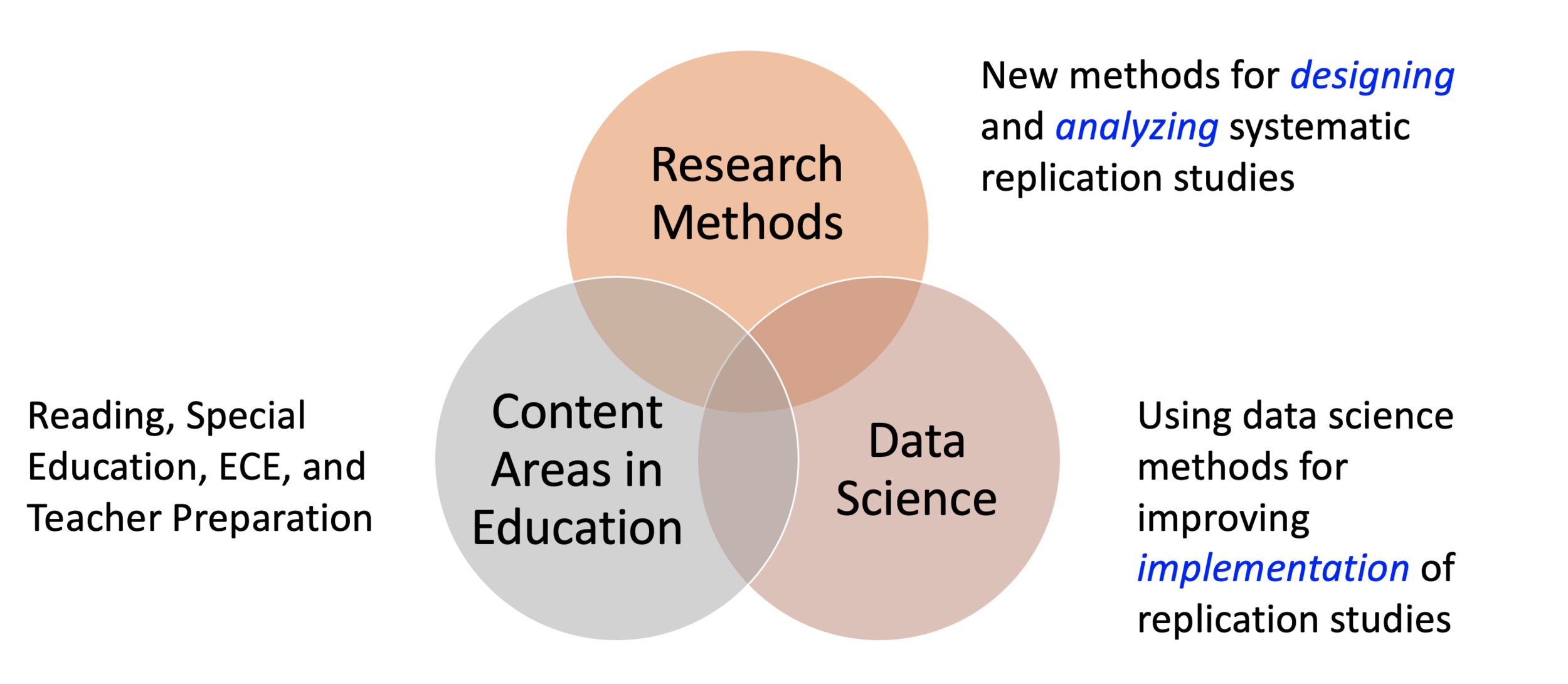

Designing, implementing, and analyzing high-quality replication studies is a collaborative effort that requires expertise from multiple disciplines. Methodological expertise is needed for designing studies and analyzing findings so that they generate interpretable results. Substantive expertise is needed for defining critical questions for the field, for identifying conditions that may facilitate or challenge the implementation of studies, and for interpreting results. Given that replication studies are large-scale data collection efforts that often include multiple sites, investigators, and implementation approaches, data science approaches are also needed for efficiently collecting, processing, and sharing information for openness and transparency.

Systematic Replication Studies from our Lab

The Collaboratory Replication Lab has partnered with education researchers in teacher preparation, special education, and reading to design, implement, and analyze systematic replication studies based on the Causal Replication Framework. Examples include:

Citations

Bollen, K., Cacioppo, J., Kaplan, R., Krosnick, J. A., & Olds, J. L. (2015). Social, Behavioral, and Economic Sciences Perspectives on Robust and Reliable Science. Report of the Subcommittee on Replicability in Science Advisory Committee to the National Science Foundation Directorate for Social, Behavioral, and Economic Sciences.

Brandt, M. J., IJzerman, H., Dijksterhuis, A., Farach, F. J., Geller, J., Giner-Sorolla, R., … van ’t Veer, A. (2014). The Replication Recipe: What makes for a convincing replication? Journal of Experimental Social Psychology, 50(1), 217–224. https://doi.org/10.1016/j.jesp.2013.10.005

Dewald, W. G., Thursby, J. G., & Anderson, R. G. (1986). Replication in Empirical Economics: The Journal of Money, Credit and Banking Project. The American Economic Review, 76(4), 587–603. Retrieved from http://www.jstor.org/stable/1806061

Duvendack, M., Palmer-Jones, R., & Reed, W. R. (2017). What Is Meant by “Replication” and Why Does It Encounter Resistance in Economics? American Economic Review, 107(5), 46–51. https://doi.org/10.1257/aer.p20171031

Hansen, W. B. (2011). Was Herodotus Correct? Prevention Science, 12(2), 118–120. https://doi.org/10.1007/s11121-011-0218-5

Kahneman, D. (2014). A new etiquette for replication. Social Psychology, 45(4), 310–311.

Lykken, D. T. (1968). Statistical significance in psychological research. Psychological Bulletin, 70(3), 151–159. https://doi.org/10.1037/h0026141

Madden, C. S., Easley, R. W., & Dunn, M. G. (1995). How Journal Editors View Replication Research. Journal of Advertising, 24(4), 77–87. https://doi.org/10.1080/00913367.1995.10673490

Makel, M. C., & Plucker, J. A. (2014). Facts Are More Important Than Novelty: Replication in the Education Sciences. Educational Researcher, 43(6), 304–316. https://doi.org/10.3102/0013189X14545513

Nosek, B. A., & Errington, T. M. (2017). Making sense of replications. ELife, 6. https://doi.org/10.7554/eLife.23383

Open Science Collaboration. (2015). Estimating the reproducibility of psychological science. Science, 349, aac4716. https://doi.org/10.1126/science.aac4716

Schmidt, S. (2009). Shall we really do it again? The powerful concept of replication is neglected in the social sciences. Review of General Psychology, 13(2), 90–100. https://doi.org/10.1037/a0015108

Simons, D. J. (2014). The Value of Direct Replication. Perspectives on Psychological Science, 9(1), 76–80. https://doi.org/10.1177/1745691613514755

Valentine, J. C., Biglan, A., Boruch, R. F., Castro, F. G., Collins, L. M., Flay, B. R., … Schinke, S. P. (2011). Replication in Prevention Science. Prevention Science, 12(2), 103–117. https://doi.org/10.1007/s11121-011-0217-6

Wong, V. C., & Steiner, P. M. (2018). Replication Designs for Causal Inference. EdPolicyWorks Working Paper Series. Retrieved from http://curry.virginia.edu/uploads/epw/62_Replication_Designs.pdf http://curry.virginia.edu/edpolicyworks/wp

Zwaan, R., Etz, A., Lucas, R. E., & Donnellan, B. (2017). Making Replication Mainstream. https://doi.org/10.31234/OSF.IO/4TG9C