Design Replication Studies for Evaluating Non-Experimental Methods

Introduced in the 1980s by LaLonde (1986) and Fraker and Maynard (1987), design replication studies (also called “within-study comparison” designs) evaluate whether a quasi-experimental approach (such as an observational study, a comparative interrupted time series design, or a regression-discontinuity design) replicates findings from a gold-standard RCT with the same target population. The goal is to evaluate whether individual study assumptions under the Causal Replication Framework (CRF), namely unbiased identification and unbiased estimation of effects, are met. This is done by comparing results from a non-experimental approach with an RCT benchmark while addressing all other CRF assumptions. When the RCT benchmark is well implemented and the non-experimental design fails to replicate the RCT results, the researcher concludes that the identification assumption in the non-experiment was not met. That is, bias in the non-experimental method caused the results not to replicate across the two studies. Results from design replication studies over the last 30 years have been influential in shaping methodology policy, describing contexts and conditions under which quasi- and non-experimental methods are expected to yield unbiased results in field settings.

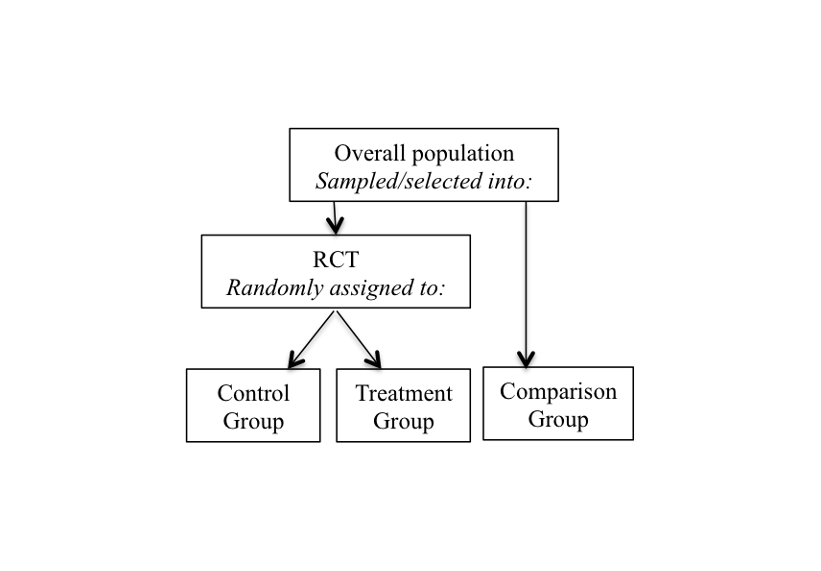

Example of a dependent-arm design replication study that shares the same experimental treatment group

Design Replication (Within-Study Comparisons) as Direct Replication Studies

Under the Causal Replication Framework, direct replication studies examine whether two or more studies with the same causal estimand produce the same effects. In design replication studies, the researcher attempts to ensure that the experimental benchmark and the non-experiment share the same outcome measure and treatment conditions, and identify effects for the same target population. This ensures that the replication assumptions of the Causal Replication Framework are addressed. What varies across studies is the research design used to identify effect estimates.

Wong and Steiner (2018) describe conditions under which replication failure may be interpreted as a failure of the non-experimental method to produce unbiased effect estimates in applied settings. In a dependent-arm design (figure above), because RCT treatment units are shared across the experimental benchmark and the non-experimental studies, the treatment condition is the same across both studies. The researcher should also ensure that the experimental control and non-experimental comparison conditions are the same (so that one group does not have alternative options for treatment) and that the same outcome measures are being compared. This means that the same instruments should be used for assessing outcomes, and that they are administered in similar ways, in similar settings, and at similar times relative to when the intervention was introduced. When these replication assumptions are met, the researcher compares results across studies with the same causal estimand. Differences in effect estimates may then be interpreted as a failure of direct replication, that is, as differences in results that arise from bias or incorrect reporting in the individual studies.
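The logic of this comparison can be written out in a short derivation. The notation here is an assumption introduced for illustration and is not taken from the cited papers: \tau is the shared causal estimand, \hat{\tau}_{RCT} and \hat{\tau}_{NE} are the experimental and non-experimental estimates, and B denotes the bias of each estimator.

```latex
% Observed discrepancy between the two designs
\hat{\Delta} = \hat{\tau}_{NE} - \hat{\tau}_{RCT}

% When both studies target the same estimand \tau (replication assumptions met):
E[\hat{\Delta}]
  = \bigl(E[\hat{\tau}_{NE}] - \tau\bigr) - \bigl(E[\hat{\tau}_{RCT}] - \tau\bigr)
  = B_{NE} - B_{RCT}

% With a well-implemented RCT benchmark, B_{RCT} = 0, so
E[\hat{\Delta}] = B_{NE}
```

Under these assumptions, a discrepancy that exceeds what sampling error would explain points to bias in the non-experimental design. If the two studies did not share the same estimand, the discrepancy would also contain a term for the difference in estimands, and it could no longer be read as bias alone.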

Feasibility of Design Replication Studies

Over the last forty years, more than 100 design replication studies have examined the performance of non-experimental methods in field settings. They have been implemented in multiple fields in the social sciences, including job training, education, criminology, political science, prevention science, and health. These studies demonstrate that direct replication studies for identifying causal sources of variation in field settings are both feasible and desirable in evaluation contexts. They are also needed to help researchers understand the conditions under which non- and quasi-experimental studies are likely to produce unbiased effects in applied settings.

Improving Methodology for Conducting Design Replication Studies

As statistical theory on non-experimental (NE) methods continues to develop, more within-study comparisons (WSCs) are needed to assess whether these methods are suitable for causal inference in field settings, that is, whether the underlying assumptions required for identification and estimation are likely to be met. Equally important is the need to identify conditions under which NE methods fail to produce credible results, and to probe which identification and estimation assumptions are violated in field settings.

Supported by the National Science Foundation, Wong and Steiner are leading a project that introduces the assumptions required for high-quality design replication studies to yield valid and interpretable results, and that recommends analytic methods for assessing correspondence in replication results. The project has a third goal of establishing infrastructure for ongoing quantitative synthesis of results from empirical evaluations of non-experimental methods. To this end, the project is conducting a meta-analysis of all design replication results, with the goal of providing an ongoing, living repository of design replication results for future research on non-experimental practice.
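As an illustration of what assessing correspondence can involve, the sketch below pairs a two-sided difference test with an equivalence test (two one-sided tests) on the gap between a non-experimental estimate and its RCT benchmark. It is a generic sketch under stated assumptions, not the project's recommended procedure: the function name, the normal approximation, the `tolerance` threshold, and the `cov` argument (for the covariance that arises when the two estimates share a treatment group, as in a dependent-arm design) are all introduced here for illustration.

```python
from dataclasses import dataclass
from math import erf, sqrt


def normal_cdf(z: float) -> float:
    """Standard normal cumulative distribution function."""
    return 0.5 * (1.0 + erf(z / sqrt(2.0)))


@dataclass
class Correspondence:
    difference: float     # NE estimate minus RCT estimate
    se: float             # standard error of the difference
    p_difference: float   # H0: the two estimates are equal
    p_equivalence: float  # H0: |difference| >= tolerance


def assess_correspondence(est_ne, se_ne, est_rct, se_rct, tolerance, cov=0.0):
    """Hypothetical helper: difference test plus equivalence test (TOST).

    Assumes both estimates are approximately normal. `cov` is the covariance
    between the two estimates; 0.0 treats them as independent.
    """
    diff = est_ne - est_rct
    se = sqrt(se_ne**2 + se_rct**2 - 2.0 * cov)

    # Two-sided test of H0: diff == 0.
    p_diff = 2.0 * (1.0 - normal_cdf(abs(diff) / se))

    # Two one-sided tests of H0: diff <= -tolerance and H0: diff >= tolerance.
    p_lower = 1.0 - normal_cdf((diff + tolerance) / se)
    p_upper = normal_cdf((diff - tolerance) / se)
    p_equiv = max(p_lower, p_upper)

    return Correspondence(diff, se, p_diff, p_equiv)


# Made-up effect sizes in standard deviation units, for illustration only.
result = assess_correspondence(
    est_ne=0.25, se_ne=0.05, est_rct=0.20, se_rct=0.05, tolerance=0.20
)
print(result)
```

Reading the two tests together is more informative than either alone: a non-significant difference test by itself may only reflect low power, while a significant equivalence test supports the claim that the estimates agree within the chosen tolerance. The tolerance is a substantive judgment about how much bias is acceptable, not a statistical default.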

Further Reading

Learning from Design Replication Studies

Wong, V.C., Steiner, P.M., & Anglin, K. (2018). What Can We Learn from Empirical Evaluations of Non-experimental Methods? Evaluation Review. Advance online publication. https://doi.org/10.1177/0193841X18776870.

Wong, V.C., Valentine, J., & Miller-Bain, K. (2017). Covariate Selection in Education Observation Studies: A Review of Results from Within-study Comparisons. Journal of Research on Educational Effectiveness, 10(1).

Steiner, P.M., Cook, T.D., Li, W., & Clark, M.H. (2015). Bias reduction in quasi-experiments with little selection theory but many covariates. Journal of Research on Educational Effectiveness, 8(4), 552-576. https://doi.org/10.1080/19345747.2014.978058.

Marcus, S.M., Stuart, E.A., Wang, P., Shadish, W.R., & Steiner, P.M. (2012). Estimating the causal effect of randomization versus treatment preference in a doubly-randomized preference trial. Psychological Methods, 17(2), 244-254.

Steiner, P.M., Cook, T.D., & Shadish, W.R. (2011). On the importance of reliable covariate measurement in selection bias adjustments using propensity scores. Journal of Educational and Behavioral Statistics, 36(2), 213-236.

Steiner, P.M., Cook, T.D., Shadish, W.R., & Clark, M.H. (2010). The importance of covariate selection in controlling for selection bias in observational studies. Psychological Methods, 15(3), 250-267.

Pohl, S., Steiner, P.M., Eisermann, J., Soellner, R., & Cook, T.D. (2009). Unbiased causal inference from an observational study: Results of a within-study comparison. Educational Evaluation and Policy Analysis, 31(4), 463-479.

Cook, T. D., Shadish, W. R., & Wong, V. C. (2008). Three Conditions under which Experiments and Observational Studies often Produce Comparable Causal Estimates: New Findings from Within-Study Comparisons. Journal of Policy Analysis and Management, 27(4), 724-750.

Cook, T. D., & Wong, V. C. (2008). Empirical Tests of the Validity of the Regression Discontinuity Design. Annals of Economics and Statistics, 91/92, 127-150.

Shadish, W.R., Clark, M.H., & Steiner, P.M. (2008). Can nonrandomized experiments yield accurate answers? A randomized experiment comparing random to nonrandom assignment (with comments by Little/Long/Lin, Hill, and Rubin, and a rejoinder). Journal of the American Statistical Association, 103, 1334-1356.

Methodology for Design Replication Studies

Wong, V.C. & Steiner, P.M. (2018). Designs of Empirical Evaluations of Non-Experimental Methods in Field Settings. Evaluation Review. Advance online publication. https://doi.org/10.1177/0193841X18778918.

Steiner, P.M. & Wong, V.C. (2018). Assessing Correspondence between Experimental and Non-Experimental Results in Within-Study Comparisons. Evaluation Review. Advance online publication. https://doi.org/10.1177/0193841X18773807.

Examples of Design Replication Studies Conducted by the Lab

Shadish, W.R., Galindo, R., Wong, V.C., Steiner, P.M., & Cook, T.D. (2011). A Randomized Experiment Comparing Random to Cutoff-based Assignment. Psychological Methods, 16(2), 179-191.

Anglin, K., McConeghy, K., Miller-Bain, K., Wing, C., & Wong, V.C. (2020). Are Difference in Difference and Interrupted Time Series Methods an Effective Way to Study the Causal Effects of Changes in Health Insurance Plans? Ed Policy Works Working Paper.

Citations

Fraker, T., & Maynard, R. (1987). The Adequacy of Comparison Group Designs for Evaluations of Employment-Related Programs. The Journal of Human Resources, 22(2). https://doi.org/10.2307/145902

LaLonde, R. J. (1986). Evaluating the Econometric Evaluations of Training Programs with Experimental Data. The American Economic Review, 76(4), 604–620.